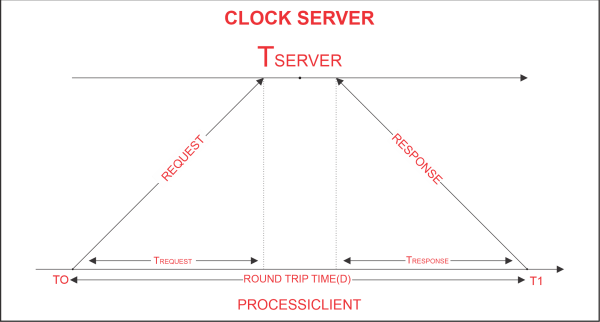

Cristian's Algorithm

Cristian's algorithm is a clock synchronisation algorithm that client processes use to synchronise their time with a time server. It works well on low-latency networks, where the round trip time is short compared with the required accuracy; it is poorly suited to redundancy-prone distributed systems and applications. Here, round trip time (RTT) is the interval between the start of a request and the end of the corresponding response.

The operation of Cristian's algorithm is outlined below:

Algorithm:

- The process on the client machine sends a request to the clock server at time $T_0$, asking for the clock time (the time at the server).

- The clock server listens for the request and responds with its clock time, $T_{SERVER}$.

- The client process receives the response from the clock server at time $T_1$ and uses the formula below to compute the synchronised client clock time.

$$T_{CLIENT} = T_{SERVER} + \frac{T_1 - T_0}{2}$$

where $T_{CLIENT}$ denotes the synchronised clock time, $T_{SERVER}$ denotes the clock time returned by the server, $T_0$ denotes the time at which the client process sent the request, and $T_1$ denotes the time at which the client process received the response.
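The formula can be sketched as a small Python function. This is only an illustration: the function name `cristian_estimate` and the sample values are assumptions, not part of the original text. `t0` and `t1` are readings of a local timer in seconds, and `server_time` is the timestamp returned by the server.

```python
from datetime import datetime, timedelta


def cristian_estimate(t0: float, t1: float, server_time: datetime) -> datetime:
    """Return T_CLIENT = T_SERVER + (T1 - T0) / 2.

    t0 and t1 are local timer readings in seconds (hypothetical inputs).
    """
    if t1 < t0:
        raise ValueError("t1 must not be earlier than t0")
    round_trip = t1 - t0
    # Assume the response took half of the round trip to arrive
    return server_time + timedelta(seconds=round_trip / 2)


# Illustrative values: a 40 ms round trip
server_time = datetime(2018, 11, 7, 17, 56, 43)
estimate = cristian_estimate(0.0, 0.04, server_time)
print(estimate)
```

With a 40 ms round trip, the estimate is the server timestamp advanced by 20 ms.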

The formula above is valid and reliable for the following reason:

Assuming that the network latency of the request and that of the response are roughly equal, $T_1 - T_0$ is the total time the network and the server need to carry the request to the server, process it, and return the response to the client process. The server's timestamp is therefore about $(T_1 - T_0)/2$ old when it reaches the client.

Because the server generated its timestamp at some instant between $T_0$ and $T_1$, the synchronised client time differs from the real time by no more than $(T_1 - T_0)/2$ seconds. In other words, the synchronisation error is at most $(T_1 - T_0)/2$ seconds in either direction.

Hence,

$$\text{Error} \in \left[-\frac{T_1 - T_0}{2},\ \frac{T_1 - T_0}{2}\right]$$
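The bound can be checked with a small simulation. The sketch below is a simplified model, not a measurement: it assumes zero server processing time and a perfect reference clock for measuring the error, and works in milliseconds. The function name `simulate_sync_error` and the delay values are hypothetical.

```python
def simulate_sync_error(request_delay_ms, response_delay_ms):
    """Return (error, bound) for one simulated exchange, in milliseconds.

    Simplifying assumptions: the server stamps the time the instant the
    request arrives, and all times are measured on a perfect reference clock.
    """
    t0 = 0                                    # request sent
    server_time = t0 + request_delay_ms       # timestamp taken at the server
    t1 = server_time + response_delay_ms      # response received
    estimate = server_time + (t1 - t0) / 2    # Cristian's estimate at T1
    error = t1 - estimate                     # true time minus estimate
    bound = (t1 - t0) / 2
    return error, bound


# Symmetric delays give zero error; fully one-sided delays hit the bound
for req, resp in [(20, 20), (10, 30), (0, 40), (40, 0)]:
    error, bound = simulate_sync_error(req, resp)
    assert abs(error) <= bound
    print(f"request={req} ms, response={resp} ms, error={error} ms, bound={bound} ms")
```

In this model the error equals half the difference between the response and request delays, so it vanishes when the two delays are equal and reaches the bound only when one of them is zero.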

The Python code below demonstrates how Cristian's algorithm works.

To start a clock server prototype on a local machine, enter the following code:

```python
# Python3 program imitating a clock server

import socket
import datetime


# Function used to initiate the clock server
def initiateClockServer():

    s = socket.socket()
    print("Socket successfully created")

    # Server port
    port = 8000

    s.bind(('', port))

    # Start listening to requests
    s.listen(5)
    print("Socket is listening...")

    # Clock server runs forever
    while True:

        # Establish connection with client
        connection, address = s.accept()
        print('Server connected to', address)

        # Respond to the client with the server clock time
        connection.send(str(datetime.datetime.now()).encode())

        # Close the connection with the client process
        connection.close()


# Driver function
if __name__ == '__main__':

    # Trigger the clock server
    initiateClockServer()
```

Output:

```
Socket successfully created
Socket is listening...
```

On the local machine, the following code runs a client process prototype:

```python
# Python3 program imitating a client process
# Note: dateutil is the third-party python-dateutil package

import socket
import datetime
from dateutil import parser
from timeit import default_timer as timer


# Function used to synchronize the client process time
def synchronizeTime():

    s = socket.socket()

    # Server port
    port = 8000

    # Record T0 before connecting: the server replies as soon as it
    # accepts the connection, so the connect call acts as the request
    request_time = timer()

    # Connect to the clock server on the local computer
    s.connect(('127.0.0.1', port))

    # Receive data from the server and record T1
    server_time = parser.parse(s.recv(1024).decode())
    response_time = timer()
    actual_time = datetime.datetime.now()

    print("Time returned by server: " + str(server_time))

    # Round trip time T1 - T0
    process_delay_latency = response_time - request_time

    print("Process Delay latency: "
          + str(process_delay_latency)
          + " seconds")

    print("Actual clock time at client side: "
          + str(actual_time))

    # Synchronize the client process clock time
    client_time = server_time \
        + datetime.timedelta(seconds=process_delay_latency / 2)

    print("Synchronized process client time: "
          + str(client_time))

    # Calculate the synchronization error
    error = actual_time - client_time
    print("Synchronization error : "
          + str(error.total_seconds()) + " seconds")

    s.close()


# Driver function
if __name__ == '__main__':

    # Synchronize time using the clock server
    synchronizeTime()
```

Output:

```
Time returned by server: 2018-11-07 17:56:43.302379
Process Delay latency: 0.0005150819997652434 seconds
Actual clock time at client side: 2018-11-07 17:56:43.302756
Synchronized process client time: 2018-11-07 17:56:43.302637
Synchronization error : 0.000119 seconds
```

By running several iterative tests over the network, we can determine a minimum transfer time and use it to compute a better synchronised clock time (one with a smaller synchronisation error).

In this case, the server time $T_{SERVER}$ is always generated after $T_0 + T_{min}$ and before $T_1 - T_{min}$, where $T_{min}$ is the minimum transfer time, that is, the minimum value of $T_{REQUEST}$ and $T_{RESPONSE}$ observed over several iterative tests. The synchronisation error can then be formulated as follows:

$$\text{Error} \in \left[-\left(\frac{T_1 - T_0}{2} - T_{min}\right),\ \left(\frac{T_1 - T_0}{2} - T_{min}\right)\right]$$
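As a quick worked example of the tightened bound, the sketch below computes the half-width of the error interval with and without a known minimum transfer time. The function name `error_bound` and the sample values (a 40 ms round trip with a 15 ms minimum transfer time) are illustrative assumptions.

```python
def error_bound(t0, t1, t_min=0.0):
    """Return the maximum synchronisation error (T1 - T0)/2 - T_min.

    All arguments share one time unit; t_min defaults to 0, which gives
    the basic bound (T1 - T0)/2.
    """
    half_rtt = (t1 - t0) / 2
    if t_min < 0 or t_min > half_rtt:
        raise ValueError("t_min must lie between 0 and (t1 - t0)/2")
    return half_rtt - t_min


# Illustrative values in milliseconds
basic = error_bound(0, 40)          # no knowledge of the minimum transfer time
tightened = error_bound(0, 40, 15)  # minimum transfer time of 15 ms
print(basic, tightened)
```

The larger the known minimum transfer time is relative to the round trip, the narrower the interval in which the server timestamp could have been generated.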

Similarly, if $T_{REQUEST}$ and $T_{RESPONSE}$ differ by a significant amount, $T_{min}$ can be replaced by $T_{min1}$ and $T_{min2}$, where $T_{min1}$ denotes the minimum observed request time and $T_{min2}$ denotes the minimum observed response time over the network.

In this scenario, the synchronised clock time can be calculated as follows:

$$T_{CLIENT} = T_{SERVER} + \frac{T_1 - T_0}{2} + \frac{T_{min2} - T_{min1}}{2}$$
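A minimal sketch of this asymmetric variant follows. The function name `cristian_estimate_asymmetric`, its parameter names, and the sample values are assumptions made for illustration; how the minimum request and response times are measured is left outside the sketch.

```python
from datetime import datetime, timedelta


def cristian_estimate_asymmetric(t0, t1, server_time, t_min1, t_min2):
    """Return T_SERVER + (T1 - T0)/2 + (T_min2 - T_min1)/2.

    t0, t1: local timer readings in seconds.
    t_min1: minimum observed request time in seconds (assumed known).
    t_min2: minimum observed response time in seconds (assumed known).
    """
    correction = (t1 - t0) / 2 + (t_min2 - t_min1) / 2
    return server_time + timedelta(seconds=correction)


# Illustrative values: 40 ms round trip, responses slower than requests
server_time = datetime(2018, 11, 7, 17, 56, 43)
estimate = cristian_estimate_asymmetric(0.0, 0.04, server_time, 0.005, 0.015)
print(estimate)
```

When the two minimum times are equal, the extra term vanishes and the result matches the basic formula; when responses are slower than requests, the estimate is pushed later, since the timestamp is older on arrival than half the round trip suggests.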

Therefore, treating the request and response times as separate latencies improves clock synchronisation and reduces the overall synchronisation error. The number of iterative tests to run depends on the overall clock drift observed.